The common approach of fixing the slowest machine often makes production bottlenecks worse; the real solution is to analyze the entire system’s interconnected dynamics.

- Reacting to visible piles of Work-in-Progress (WIP) is inefficient. Reliable bottleneck identification requires analyzing the “process harmonics” between stations.

- Non-invasive tools like Digital Twins and process mining allow for risk-free testing of changes before implementation, preventing costly downtime.

Recommendation: Shift your focus from individual asset performance (OEE) to optimizing the throughput of the entire value stream by decoding the hidden relationships between processes.

For any production engineer, the pressure to increase throughput is constant. When a line slows down, the default instinct is to find the slowest machine and speed it up. We look for the most obvious symptom: a pile of Work-in-Progress (WIP) accumulating before a specific station. This reactive, asset-focused approach feels logical, but it rarely solves the underlying problem. More often than not, it simply moves the congestion to another point downstream, creating a frustrating and costly cycle of whack-a-mole problem-solving.

The core issue is that automated production lines are not just a series of independent machines; they are complex, interconnected systems. Each station’s performance directly influences the others, creating a delicate rhythm or “process harmonic.” Fixing one component without understanding its effect on the whole is like tuning a single instrument in an orchestra and expecting the entire symphony to sound better. The true bottleneck is often not a single underperforming asset but an imbalance in the flow between multiple processes, an invisible constraint in the material handling logic, or a data silo preventing real-time coordination.

But what if the key wasn’t reactive fixing, but predictive analysis? The modern approach to bottleneck identification operates on a fundamentally different principle: diagnose the system, not just the symptom. It involves leveraging non-invasive, data-driven methods to understand the entire value stream without ever needing to press the stop button. This strategy moves beyond simple observation to predictive modeling, simulation, and a deep analysis of system-wide dynamics.

This guide provides a systematic framework for just that. We will deconstruct the common causes of hidden bottlenecks and explore the analytical tools and strategies required to pinpoint them in real time. By shifting from an asset-centric to a system-centric view, you can unlock sustainable gains in throughput and efficiency.

Summary: A Systematic Guide to Uncovering Hidden Production Constraints

- Why Increasing Speed at Station A Causes a Jam at Station B?

- How to Use Digital Twins to Test Production Line Changes Risk-Free?

- AGVs vs Conveyor Belts: Which Material Handling System Offers More Flexibility?

- The Single Point of Failure Risk That Can Stop Your Entire Factory

- When to Upgrade Machinery: The Sweet Spot Between Depreciation and Obsolescence

- Why Adaptive Signal Control Reduces Commute Time by 20%?

- How to Fix Data Silos That Prevent AI From Reading Your Machines?

- Predictive Automation: How to Eliminate 30% of Manual Data Entry Tasks?

Why Increasing Speed at Station A Causes a Jam at Station B?

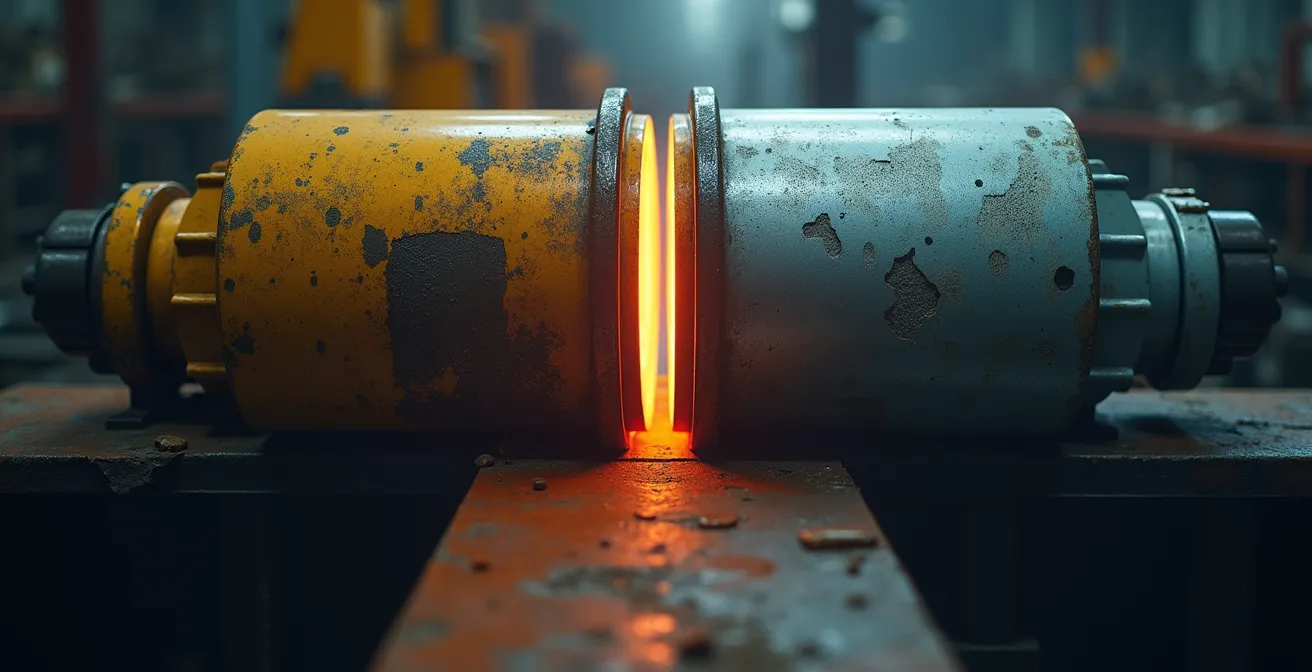

The counterintuitive reality of production lines is that local optimization often leads to global inefficiency. When you increase the speed of a single station (Station A) without considering the capacity of the next (Station B), you’re not eliminating the bottleneck; you’re just shifting its location. This happens because the line operates as an interconnected system governed by process harmonics—the balanced rhythm of flow between stations. Disrupting this rhythm by feeding material to Station B faster than it can process it creates a new pile of WIP, increases handling, and puts stress on downstream equipment.

This phenomenon is a core tenet of the Theory of Constraints (TOC), which holds that the throughput of any system is limited by its constraint, usually a single resource at any given time. “Improving” a non-constraint is not just a waste of resources; it is often actively harmful, because the extra output only becomes inventory that raises handling and operational costs. The goal is not to make every machine run at 100% capacity, but to subordinate the entire production schedule to the rhythm of the true bottleneck.
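To make the TOC claim concrete, here is a minimal sketch, assuming a three-station serial line with fixed, hypothetical capacities (parts per hour) and unlimited buffer space between stations. It shows that speeding up a non-constraint leaves line output unchanged and only accelerates WIP growth in front of the constraint.

```python
# Minimal flow model of a serial line. Assumptions (hypothetical): each
# station has a fixed capacity in parts/hour and buffers are unlimited.

def line_throughput(rates: dict[str, float]) -> float:
    """Steady-state output of a serial line: capped by its slowest station."""
    return min(rates.values())


def wip_growth(rates: dict[str, float]) -> dict[str, float]:
    """Parts per hour piling up in front of each downstream station.

    Each station receives only what upstream can actually pass on (the
    lowest rate seen so far); WIP grows by any shortfall. The first station
    draws raw material, so it gets no entry.
    """
    growth = {}
    inflow = float("inf")
    for name, rate in rates.items():  # dicts keep insertion (line) order
        if inflow != float("inf"):
            growth[name] = max(0.0, inflow - rate)
        inflow = min(inflow, rate)
    return growth


baseline = {"A": 60.0, "B": 50.0, "C": 55.0}
faster_a = {"A": 75.0, "B": 50.0, "C": 55.0}

for label, rates in [("baseline", baseline), ("A sped up", faster_a)]:
    print(label, line_throughput(rates), wip_growth(rates))
```

With these numbers, raising A from 60 to 75 parts/hour leaves line output at 50 parts/hour, while the WIP building up in front of B grows from 10 to 25 parts/hour.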

To achieve this, you must stop looking at individual machines and start mapping the flow between them. By identifying the “drum” (the constraint that sets the pace), establishing a “buffer” (a controlled amount of WIP to protect the drum), and using a “rope” (a signal to release new work), you can harmonize the entire line. This DBR (Drum-Buffer-Rope) method ensures that you’re not just moving a problem around but are actually increasing the systemic throughput of the entire factory.
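The rope can be illustrated with a toy hour-by-hour simulation. This is a simplified sketch under hypothetical assumptions: a gateway that could release 60 parts/hour feeds a drum that processes 50 parts/hour, and the buffer target is expressed in parts rather than in time. It compares pushing work at full upstream speed with releasing only enough to refill the drum's buffer.

```python
def simulate(hours, release_rate, drum_rate, rope_target=None):
    """Simulate a gateway feeding a constraint (the drum) hour by hour.

    release_rate: parts/hour the upstream gateway could release.
    drum_rate: parts/hour the constraint can process.
    rope_target: if None, push at full speed; otherwise release only enough
        to refill the buffer in front of the drum to this many parts.
    Returns (parts_completed_by_drum, parts_waiting_in_buffer).
    """
    buffer = 0
    completed = 0
    for _ in range(hours):
        # The drum works only on parts already waiting at the start of the hour.
        processed = min(drum_rate, buffer)
        buffer -= processed
        completed += processed
        # Release new work: push ignores the drum, the rope follows it.
        if rope_target is None:
            released = release_rate
        else:
            released = min(release_rate, max(0, rope_target - buffer))
        buffer += released
    return completed, buffer


push = simulate(hours=40, release_rate=60, drum_rate=50)
rope = simulate(hours=40, release_rate=60, drum_rate=50, rope_target=100)
print("push:", push)
print("rope:", rope)
```

Both policies complete 1,950 parts in 40 hours, but pushing ends with 450 parts waiting in front of the drum versus 100 under the rope. In DBR practice the buffer is usually sized in time (hours of drum work) rather than in parts; the part count here keeps the sketch short.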

Action Plan: 5 Steps to Map Process Harmonics Between Stations

- Start with a clear question: Pinpoint where flow is breaking down, then gather only the data that answers that specific question to avoid data overload.

- Visualize process-level performance: Shift focus from individual asset metrics (OEE) to the performance and flow between processes.

- Use exception-based alerts: Configure your monitoring system so that attention is only drawn to what is truly deviating from the target performance.

- Implement Drum-Buffer-Rope (DBR): Let the constraint set the rhythm (Drum), use a strategic buffer to ensure the constraint is never starved (Buffer), and regulate new work entry based on the Drum’s pace (Rope).

- Leverage live dashboards: Use real-time data visualization and clear digital work instructions to maintain system balance and ensure the constraint is always utilized effectively without being overloaded.
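The exception-based alerts step can be sketched as a simple filter over station readings. The station names, target cycle times, and 10% tolerance below are hypothetical; a real monitoring system would compute the averages from live machine data and route the flagged stations to a dashboard or notification.

```python
from dataclasses import dataclass


@dataclass
class StationReading:
    station: str
    target_cycle_s: float   # planned cycle time in seconds
    actual_cycle_s: float   # measured average cycle time in seconds


def exceptions(readings, tolerance=0.10):
    """Return only the stations whose cycle time deviates from target by more
    than `tolerance` (a fraction, 0.10 = 10%), largest deviation first.
    Running faster than plan is flagged too: it can mean skipped steps or
    an outdated target."""
    flagged = []
    for r in readings:
        deviation = (r.actual_cycle_s - r.target_cycle_s) / r.target_cycle_s
        if abs(deviation) > tolerance:
            flagged.append((r.station, round(deviation, 3)))
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


readings = [
    StationReading("Press", 30.0, 31.0),   # within tolerance: stays silent
    StationReading("Weld", 45.0, 54.0),    # 20% slower than target
    StationReading("Paint", 40.0, 33.0),   # 17.5% faster than target
]
print(exceptions(readings))
```

Only Weld and Paint are reported; Press stays off the operator's screen, which is the point of managing by exception.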

How to Use Digital Twins to Test Production Line Changes Risk-Free?

One of the greatest challenges in bottleneck resolution is the risk associated with making changes. Halting a line to test a new configuration or rerouting flow can lead to significant downtime and lost revenue. This is where Digital Twins (DT) offer a non-invasive alternative. A Digital Twin is a high-fidelity virtual model of your physical production line, continuously updated with real-time data from sensors on your machines.

Instead of experimenting on the live factory floor, you can use the Digital Twin to run “what-if” scenarios in a completely virtual environment. Want to know what happens if you increase the speed of a conveyor, change a robot’s cycle time, or introduce a new piece of equipment? You can simulate these changes and predict their impact on throughput, WIP levels, and overall system performance, with an accuracy that depends on how well the model is calibrated against real production data. This lets you validate your optimization strategies and identify unintended consequences, such as a new bottleneck downstream, before committing capital or production downtime to the change.
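A production Digital Twin is fed by live sensor data, but its core what-if mechanic can be sketched with a small time-stepped simulation. This is a toy model under stated assumptions: three stations with fixed, hypothetical cycle times, no breakdowns or variability, unlimited raw material, and five-part buffers between stations. It compares speeding up a non-constraint (A) with speeding up the constraint (B).

```python
def simulate_line(cycle_times, buffer_caps, seconds):
    """Time-stepped model of a serial line with finite buffers and blocking.

    cycle_times: seconds per part at each station, in line order.
    buffer_caps: capacity of the buffer after each station except the last.
    Returns (parts_completed, average_wip).
    """
    n = len(cycle_times)
    remaining = [0] * n        # seconds left on the part being processed
    blocked = [False] * n      # finished part waiting for downstream space
    buffers = [0] * (n - 1)    # parts queued between station i and i + 1
    completed = 0
    wip_total = 0
    for _ in range(seconds):
        # Walk downstream-to-upstream so freed space can be refilled this tick.
        for i in reversed(range(n)):
            if remaining[i] > 0:
                remaining[i] -= 1
                if remaining[i] == 0:
                    blocked[i] = True
            if blocked[i]:
                if i == n - 1:
                    completed += 1          # last station ships to the sink
                    blocked[i] = False
                elif buffers[i] < buffer_caps[i]:
                    buffers[i] += 1
                    blocked[i] = False
            if remaining[i] == 0 and not blocked[i]:
                if i == 0:
                    remaining[i] = cycle_times[i]   # raw material never runs out
                elif buffers[i - 1] > 0:
                    buffers[i - 1] -= 1
                    remaining[i] = cycle_times[i]
        in_process = sum(1 for i in range(n) if remaining[i] > 0 or blocked[i])
        wip_total += sum(buffers) + in_process
    return completed, wip_total / seconds


SHIFT = 8 * 3600
scenarios = {
    "baseline": [50, 60, 55],
    "speed up A": [40, 60, 55],
    "speed up B": [50, 50, 55],
}
for name, cycles in scenarios.items():
    done, wip = simulate_line(cycles, buffer_caps=[5, 5], seconds=SHIFT)
    print(f"{name:12s} parts={done:4d} avg_wip={wip:.1f}")
```

In this model, cutting A's cycle time leaves shift output unchanged, while cutting B's cycle time raises output until C (55 s) becomes the new constraint. A real twin would replace the fixed cycle times with distributions and failure data calibrated from the line.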

This data-driven foresight transforms bottleneck analysis from a reactive guessing game into a predictive science. By integrating real-time data, the Digital Twin becomes more than just a static model; it’s a living, breathing replica of your operations, enabling managers to explore various strategies and choose the most effective ones with a high degree of confidence.

Case Study: Siemens Tecnomatix for Pharmaceutical Bottleneck Analysis

A pharmaceutical manufacturer was struggling with production inefficiencies. They developed a Digital Twin using Siemens Tecnomatix Plant Simulation software, which accurately replicated their physical production process. The simulation allowed them to identify hidden bottlenecks and inefficiencies with high accuracy. The primary impact was establishing the Digital Twin as a powerful decision-support tool. By integrating real-time data and running detailed simulations, managers could explore different operational strategies risk-free, ultimately choosing the most effective ones to improve production flow, reduce risks, and optimize resource allocation.

AGVs vs Conveyor Belts: Which Material Handling System Offers More Flexibility?

A production line’s material handling system is its circulatory system, and its design can either create or alleviate bottlenecks. The traditional choice, the conveyor belt, is highly efficient for linear, high-volume production of a single product. However, its rigidity is its greatest weakness. A breakdown in one section of a conveyor can halt the entire line, and reconfiguring the layout for a new product line is a massive undertaking. The bottleneck blast radius of a conveyor failure is enormous.

In contrast, Automated Guided Vehicles (AGVs) offer far greater flexibility. These driverless vehicles move materials along configurable routes, which in many modern systems are defined in software rather than built into the floor. If one AGV requires maintenance, the rest of the fleet can be rerouted around it and take over its tasks. If the factory layout changes, updating the routes is largely a software task, not a construction project. This adaptability makes AGVs particularly effective in high-mix, low-volume environments where production needs change frequently. As factories adopt Industry 4.0 principles, the IoT connectivity and real-time analytics capabilities of modern AGV fleets make them a strong choice for dynamic bottleneck management. The market reflects this shift: the global automated guided vehicle market is projected to reach $9.18 billion by 2030.
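Rerouting around a stalled vehicle or blocked aisle is, at its core, a path search on a graph of the floor layout. The sketch below uses plain breadth-first search (fewest route segments) on a hypothetical layout; it is not how any particular fleet manager works, and a real one would typically also weigh travel distance and traffic.

```python
from collections import deque


def shortest_route(graph, start, goal, blocked=frozenset()):
    """Breadth-first search for the route with the fewest segments,
    skipping any node listed in `blocked` (e.g. a stalled AGV)."""
    if start in blocked or goal in blocked:
        return None
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the predecessors to rebuild the route.
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in previous and neighbor not in blocked:
                previous[neighbor] = node
                queue.append(neighbor)
    return None  # no route avoids the blocked nodes


# Hypothetical floor layout: nodes are aisle intersections or stations.
floor = {
    "Dock": ["X1", "X2"],
    "X1": ["Dock", "X3"],
    "X2": ["Dock", "X4"],
    "X3": ["X1", "Assembly"],
    "X4": ["X2", "X5"],
    "X5": ["X4", "Assembly"],
    "Assembly": ["X3", "X5"],
}

print(shortest_route(floor, "Dock", "Assembly"))
print(shortest_route(floor, "Dock", "Assembly", blocked={"X3"}))
```

With X3 blocked, the vehicle takes the longer Dock, X2, X4, X5 path instead of waiting; a conveyor has no equivalent option.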

The choice between these systems fundamentally impacts a factory’s resilience. While conveyors excel at continuous flow, AGVs excel at adaptive flow, which can sharply reduce both the impact of material handling disruptions and the time needed to recover from them.

| Characteristic | AGVs | Conveyor Belts |
|---|---|---|
| Bottleneck Blast Radius | Smaller – affects 1-2 stations only | Large – stops entire line |
| Flexibility Score | High – adapts to layout changes | Low – fixed infrastructure |
| Traffic Jam Risk | Medium – requires fleet management | None – continuous flow |
| Recovery Time | Minutes – reroute available | Hours – manual intervention needed |
| Integration with Industry 4.0 | Native – IoT and real-time analytics built-in | Retrofit required |

The Single Point of Failure Risk That Can Stop Your Entire Factory

While performance bottlenecks throttle throughput, a Single Point of Failure (SPOF) can stop it entirely. A SPOF is any component in a system that, if it fails, will cause the entire system to stop operating. In a manufacturing context, this could be a critical machine, a central server managing the line, or even a section of a conveyor belt. Closely related, and often more dangerous, are “invisible” constraints: poorly designed or degrading components that never fail outright but silently choke the entire factory’s potential.
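One way to find structural SPOFs before they fail is to model the line as a directed graph and test, node by node, whether removing it cuts every path from input to output. The sketch below assumes a hypothetical layout and treats the line controller as a step every job passes through. It finds structural SPOFs only; the “invisible” degrading components need the performance indicators discussed next.

```python
def reachable(graph, start, goal, removed):
    """Depth-first search: can material still get from start to goal
    if the node `removed` is out of service?"""
    stack, seen = [start], {start}
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt != removed:
                seen.add(nxt)
                stack.append(nxt)
    return False


def single_points_of_failure(graph, source, sink):
    """Nodes whose individual loss disconnects source from sink."""
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    candidates = sorted(nodes - {source, sink})
    return [n for n in candidates if not reachable(graph, source, sink, n)]


# Hypothetical material flow: two parallel CNC cells, one shared washer,
# and a line controller that dispatches every job.
flow = {
    "Receiving": ["Controller"],
    "Controller": ["CNC-1", "CNC-2"],
    "CNC-1": ["Washer"],
    "CNC-2": ["Washer"],
    "Washer": ["Packing"],
    "Packing": ["Shipping"],
}
print(single_points_of_failure(flow, "Receiving", "Shipping"))
```

The two CNC cells back each other up, so neither is flagged; the controller, washer, and packing station are, because every job must pass through them.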

As the MachineMetrics Research Team notes in their analysis, a bottleneck is fundamentally a constraint where work arrives faster than the system can process it. Their guide states:

A bottleneck in manufacturing is a constraint where upstream work arrives faster than the overall production line can handle, creating congestion like the neck of a bottle that drives up costs through increased handling and lower equipment utilization

– MachineMetrics Research Team, MachineMetrics Bottleneck Analysis Guide

Identifying these hidden risks requires looking beyond machine status (up/down) and analyzing systemic indicators. These can include:

- Cycle Time Creep: Gradual increases in the time it takes to complete a process often point to a degrading component or a hidden bottleneck.

- WIP Accumulation: Monitoring the accumulation of work-in-progress at specific points remains a primary indicator of a constraint.

- Machine Utilization Imbalance: If one piece of equipment is constantly running at full capacity while others are idle, you have found an imbalance that points to a constraint.

- Graceful Degradation Design: Detection is only half of the answer. A robust system should also be designed for graceful degradation, allowing it to operate at reduced capacity during a partial failure instead of coming to a complete stop.
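The cycle time creep and utilization imbalance indicators above lend themselves to simple automated checks. The sketch below compares a recent window of cycle times against a baseline window and looks for saturated stations alongside idle ones. The window sizes, 5% threshold, and utilization cutoffs are hypothetical and would need tuning against each line's normal variation.

```python
from statistics import mean


def cycle_time_creep(cycle_times, baseline_n=20, recent_n=20, threshold=0.05):
    """Flag creep when the recent average cycle time exceeds the baseline
    average by more than `threshold` (a fraction). Returns (flag, ratio)."""
    baseline = mean(cycle_times[:baseline_n])
    recent = mean(cycle_times[-recent_n:])
    ratio = recent / baseline - 1
    return ratio > threshold, ratio


def utilization_imbalance(utilization, high=0.95, low=0.60):
    """Return saturated stations, but only when others sit largely idle:
    that combination suggests a constraint rather than a busy plant."""
    saturated = [s for s, u in utilization.items() if u >= high]
    idle = [s for s, u in utilization.items() if u <= low]
    return saturated if saturated and idle else []


# Hypothetical data: a cycle that drifts from 60 s toward 66 s.
cycles = [60.0] * 20 + [60.0 + 0.3 * k for k in range(1, 21)]
print(cycle_time_creep(cycles))
print(utilization_imbalance({"Lathe": 0.98, "Mill": 0.55, "Deburr": 0.50}))
```

Here the recent average (63.15 s) sits about 5.25% above the 60 s baseline, so creep is flagged, and the lathe is singled out as the likely constraint.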

When to Upgrade Machinery: The Sweet Spot Between Depreciation and Obsolescence

Legacy machinery is one of the most common sources of production bottlenecks. While an old machine might still be functional, it can become a constraint due to slower cycle times, frequent unplanned downtime, or an inability to integrate with modern monitoring systems. However, replacing capital-intensive equipment is a major decision. The challenge lies in finding the sweet spot between an asset’s accounting depreciation and its functional obsolescence—the point where its negative impact on throughput outweighs the cost of upgrading.

The key to making this decision is data. Without accurate performance metrics, it’s impossible to quantify the true cost of an aging machine. This includes not just its maintenance costs, but the opportunity cost of lost production due to it being a bottleneck. By implementing modern machine monitoring platforms, manufacturers can gain instant visibility into shop floor performance, tracking metrics like OEE, cycle time, and downtime causes in real time. This data provides the concrete business case needed to justify an upgrade.
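The trade-off described above (maintenance plus the opportunity cost of lost production, weighed against the cost of upgrading) can be framed as a simple annual comparison. This is a deliberately simplified sketch with hypothetical figures: it assumes the new machine fully removes the constraint, uses straight-line annualization, and ignores financing, taxes, and the time value of money. A real business case would use the plant's own discounted cash-flow method.

```python
def annual_cost_of_keeping(maintenance, lost_parts_per_year, margin_per_part):
    """Maintenance spend plus the contribution margin lost while the
    machine acts as the constraint (the opportunity cost)."""
    return maintenance + lost_parts_per_year * margin_per_part


def annualized_upgrade_cost(price, old_machine_resale, years, new_maintenance):
    """Straight-line annualization of the net outlay over the new machine's
    useful life, plus its (lower) maintenance. Simplified: no discounting."""
    return (price - old_machine_resale) / years + new_maintenance


# All figures are hypothetical illustration values.
keep = annual_cost_of_keeping(maintenance=40_000, lost_parts_per_year=6_000,
                              margin_per_part=15.0)
upgrade = annualized_upgrade_cost(price=450_000, old_machine_resale=50_000,
                                  years=8, new_maintenance=10_000)
print(keep, upgrade, "upgrade" if upgrade < keep else "keep")
```

The machine monitoring data mentioned above is what makes `lost_parts_per_year` a measured number rather than a guess; without it, the opportunity cost term is usually the least reliable input.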

Often, the solution isn’t even a full replacement. Retrofitting older machines with IoT sensors and connecting them to a central analytics platform can provide the necessary data to optimize their performance or demonstrate their role as a constraint. This data-driven approach ensures that capital is invested where it will have the greatest impact on systemic throughput.
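Once a retrofitted sensor reports downtime and part counts, OEE follows from the standard formula OEE = Availability × Performance × Quality. The shift figures below are hypothetical; in practice the downtime and counts would come from the sensor feed rather than manual logs.

```python
def oee(planned_min, downtime_min, ideal_cycle_s, total_count, good_count):
    """Classic OEE = Availability x Performance x Quality.

    Availability: run time / planned production time.
    Performance:  (ideal cycle time x total parts) / run time.
    Quality:      good parts / total parts.
    """
    run_min = planned_min - downtime_min
    availability = run_min / planned_min
    performance = (ideal_cycle_s * total_count) / (run_min * 60)
    quality = good_count / total_count
    return availability * performance * quality, (availability, performance, quality)


# Hypothetical 8-hour shift: 48 min of downtime, 30 s ideal cycle,
# 720 parts produced, 684 of them good.
score, (a, p, q) = oee(planned_min=480, downtime_min=48, ideal_cycle_s=30,
                       total_count=720, good_count=684)
print(f"OEE={score:.1%}  A={a:.1%}  P={p:.1%}  Q={q:.1%}")
```

Breaking the score into its three factors shows where an aging machine actually loses time: here performance (about 83%) is the weakest factor, which points at slow cycles rather than breakdowns.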

Case Study: Avalign Technologies’ 25-30% OEE Improvement

Avalign Technologies, a medical device manufacturer, was struggling with poor machine performance and bottlenecks due to difficulty in tracking OEE and machine downtime. After deploying MachineMetrics across four facilities, they achieved instant visibility into their shop floor. According to a case study on their implementation, the results were transformative: a 25-30% increase in OEE, more effective workforce leverage, and millions of dollars in increased capacity without purchasing new equipment. This was achieved primarily by identifying and reducing bottlenecks through data-driven insights.

Why Adaptive Signal Control Reduces Commute Time by 20%?

In city traffic management, adaptive signal control systems use real-time vehicle data to adjust the timing of traffic lights, reducing congestion and cutting commute times by up to 20%. This concept provides a powerful metaphor for managing material flow in a modern factory. A traditional production line operates on a fixed schedule, much like old traffic lights on a fixed timer. It doesn’t account for real-time variations in machine performance or material availability. This is where an “adaptive signal control” for the factory comes in.

By implementing a central control system that acts like an air traffic controller for materials, you can dynamically route AGVs, allocate resources, and adjust production schedules based on live data. This system uses real-time location systems (RTLS) to track every piece of WIP and all mobile assets. When a machine experiences a micro-stoppage, the system can instantly reroute incoming materials to a buffer zone or another available machine, preventing a minor issue from causing a major line-wide jam. This is especially relevant given that logistics was the largest application segment for AGVs in 2023, accounting for over 43% of the market, which underlines their role in internal material flow.
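The rerouting behavior described here amounts to a dispatch rule evaluated whenever a part arrives. The sketch below is a hypothetical rule, not a feature of any particular MES or fleet manager: send the part to its planned machine if it is running, otherwise to the least-loaded running machine capable of the same operation, otherwise to a buffer zone.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    name: str
    up: bool                 # live status from the machine or RTLS feed
    queue: int               # parts already waiting at the machine
    operations: set = field(default_factory=set)


def dispatch(operation, planned, machines, buffer_space):
    """Return where an incoming part should go (a hypothetical rule).

    1. The planned machine, if it is running.
    2. Otherwise the running machine that can do the same operation
       and has the shortest queue.
    3. Otherwise a buffer zone, if it has space; else hold upstream.
    """
    by_name = {m.name: m for m in machines}
    if by_name[planned].up:
        return planned
    alternatives = [m for m in machines
                    if m.up and operation in m.operations and m.name != planned]
    if alternatives:
        return min(alternatives, key=lambda m: m.queue).name
    return "buffer" if buffer_space > 0 else "hold upstream"


machines = [
    Machine("Mill-1", up=False, queue=3, operations={"mill"}),  # micro-stoppage
    Machine("Mill-2", up=True, queue=4, operations={"mill", "drill"}),
    Machine("Mill-3", up=True, queue=1, operations={"mill"}),
    Machine("Drill-1", up=True, queue=0, operations={"drill"}),
]
print(dispatch("mill", "Mill-1", machines, buffer_space=10))
```

While Mill-1 is stopped, its incoming work goes to Mill-3, the capable machine with the shortest queue; parts only fall back to the buffer zone when no alternative is running.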

Implementing this requires a combination of technologies. Process mining, which analyzes event logs from your MES/ERP, can reveal how work *really* moves through the plant, often uncovering inefficient “cow paths” that deviate from the official process. This data, combined with discrete event simulation, allows you to model and test adaptive routing rules before they go live, ensuring your factory’s circulatory system is as smart and responsive as a modern city’s traffic grid.
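The core of process mining's view of how work *really* moves is variant analysis: reconstruct each job's path from time-stamped events and compare it against the documented route. The sketch below uses a tiny hypothetical event log in plain Python; dedicated tools (for example the open-source PM4Py library) add process discovery, conformance checking, and visualization on top of this idea.

```python
from collections import Counter, defaultdict

# Hypothetical MES event log: (case_id, timestamp, activity).
events = [
    ("J1", 1, "Cut"), ("J1", 2, "Weld"), ("J1", 3, "Paint"), ("J1", 4, "Inspect"),
    ("J2", 1, "Cut"), ("J2", 3, "Weld"), ("J2", 4, "Rework"), ("J2", 5, "Weld"),
    ("J2", 6, "Paint"), ("J2", 7, "Inspect"),
    ("J3", 2, "Cut"), ("J3", 4, "Weld"), ("J3", 5, "Paint"), ("J3", 6, "Inspect"),
    ("J4", 3, "Cut"), ("J4", 5, "Paint"), ("J4", 6, "Weld"), ("J4", 8, "Inspect"),
]
OFFICIAL_ROUTE = ("Cut", "Weld", "Paint", "Inspect")


def variants(log):
    """Group events by case, order them by time, and count each distinct path."""
    traces = defaultdict(list)
    for case_id, ts, activity in sorted(log, key=lambda e: (e[0], e[1])):
        traces[case_id].append(activity)
    return Counter(tuple(path) for path in traces.values())


def deviations(log, reference):
    """Paths that differ from the documented route, most frequent first."""
    return [(path, n) for path, n in variants(log).most_common() if path != reference]


for path, count in deviations(events, OFFICIAL_ROUTE):
    print(count, " -> ".join(path))
```

In this log, half the jobs follow the official route; the others reveal a rework loop at welding and a job painted before it was welded, exactly the kind of “cow path” that never appears on the process chart.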

How to Fix Data Silos That Prevent AI From Reading Your Machines?

You can have the most advanced AI algorithms and the most capable machines, but if they can’t talk to each other, their potential is wasted. Data silos are one of the most pervasive and damaging “invisible constraints” in modern manufacturing. This occurs when data from different machines, departments, or software systems (like MES, ERP, and SCADA) is trapped in isolated databases with no way to be aggregated or analyzed holistically. An AI algorithm trying to predict a line-wide bottleneck is effectively blind if it can only see data from one machine at a time.

Breaking down these silos is the foundational step for any smart factory initiative. The solution lies in creating a unified data layer or “single source of truth.” This doesn’t necessarily mean replacing all your existing systems. Instead, it often involves implementing a data abstraction layer, such as an API gateway or an industrial data platform, that can connect to various data sources, translate their different protocols and formats, and present the information in a standardized way. This unified data stream can then be fed into AI and machine learning models to perform system-wide analysis.
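A minimal sketch of such a translation layer, assuming two hypothetical source formats (a PLC historian export and an MES record) whose field names are invented for illustration. Each adapter maps its source into one shared schema with UTC timestamps, so downstream analytics never deal with source-specific formats. Real deployments would read from the actual interfaces (for example OPC UA servers or MQTT brokers) rather than hand-built dictionaries.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """The common schema every source is translated into."""
    machine_id: str
    metric: str
    value: float
    timestamp: datetime  # always timezone-aware UTC


def from_plc_row(row):
    """Hypothetical PLC historian export: epoch seconds, tag 'M07.SPINDLE_TEMP'."""
    machine, metric = row["tag"].split(".", 1)
    return Reading(machine, metric.lower(), float(row["val"]),
                   datetime.fromtimestamp(row["t"], tz=timezone.utc))


def from_mes_record(rec):
    """Hypothetical MES record: ISO-8601 time with offset, string values."""
    return Reading(rec["asset"], rec["kpi"].lower(), float(rec["reading"]),
                   datetime.fromisoformat(rec["ts"]).astimezone(timezone.utc))


ADAPTERS = {"plc": from_plc_row, "mes": from_mes_record}


def unify(batches):
    """Translate (source, record) pairs into one time-ordered stream."""
    readings = [ADAPTERS[source](record) for source, record in batches]
    return sorted(readings, key=lambda r: r.timestamp)


stream = unify([
    ("mes", {"asset": "M07", "kpi": "CYCLE_TIME", "reading": "61.5",
             "ts": "2024-05-01T08:00:05+00:00"}),
    ("plc", {"tag": "M07.SPINDLE_TEMP", "val": 71.2, "t": 1714550400}),
])
for reading in stream:
    print(reading)
```

Adding a new source means writing one more adapter; nothing downstream changes, which is what lets existing systems stay in place.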

With a holistic view of the entire production process, AI can identify complex, interdependent patterns that would be very difficult for a human to spot. For example, it can correlate a slight increase in motor temperature at Station A with a cycle time deviation at Station D ten minutes later, predicting a bottleneck before it even begins to form.

Case Study: Intel’s AI-Driven Data Integration

Plant managers constantly deal with data scattered across the factory floor, making it nearly impossible to predict operational outcomes accurately. A report on modern manufacturing bottlenecks highlights how Intel, a leading technology company, faced this exact challenge. By implementing AI-driven data analytics, they were able to integrate siloed information, significantly speed up operations, and reduce their time-to-insight. This success demonstrates how breaking down data silos is a critical step to eliminating modern production constraints and unlocking the power of predictive analytics.

Key Takeaways

- True bottleneck analysis requires a shift from focusing on individual machines to analyzing the entire system’s dynamics and flow.

- Non-invasive tools like Digital Twins and process mining are essential for testing changes and predicting outcomes without costly downtime.

- Flexible material handling (like AGVs) and a unified data infrastructure are critical foundations for building a resilient, bottleneck-resistant factory.

Predictive Automation: How to Eliminate 30% of Manual Data Entry Tasks?

A surprising source of production inefficiency and data inaccuracy is manual data entry. When operators have to manually log downtime codes, production counts, or quality checks, it introduces the potential for human error, delays, and subjective reporting. This “dirty data” can mask the true root causes of bottlenecks, leading analytics efforts astray. If your data says a machine was down for a “short break” when it was actually experiencing a recurring mechanical fault, you’ll never solve the real problem. Predictive automation aims to eliminate this issue by automating the data capture process itself.

This goes beyond simply connecting a machine’s PLC to a database. It involves a suite of technologies designed to digitize every aspect of the production process objectively. These include:

- Automated Production Monitoring: Platforms that connect directly to machine controllers to automatically capture cycle times, part counts, and status, eliminating human error in data entry.

- Computer Vision with OCR: Using cameras with Optical Character Recognition (OCR) to read analog gauges and displays on legacy equipment, bringing old assets into the digital fold.

- Acoustic and Vibration Sensors: Deploying sensors that use AI to listen for abnormal operational sounds or feel unusual vibrations, automatically logging potential fault events before they cause a failure.

- Automated Downtime Logging: Establishing systems that provide objective, automatically generated downtime codes based on machine data rather than relying on operator-selected options.
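A minimal sketch of automated downtime logging, assuming the controller reports time-stamped state changes (RUN, STOP, ALARM) plus alarm text. The state names, the two-minute micro-stop threshold, and the code labels are hypothetical; a real system would map events onto the plant's own downtime taxonomy.

```python
from dataclasses import dataclass


@dataclass
class StateChange:
    minute: int
    state: str          # e.g. "RUN", "STOP", "ALARM" as reported by the controller
    alarm: str = ""     # controller alarm text, if any


def classify_downtime(changes, short_stop_max=2):
    """Turn a machine's state history into coded downtime events.

    Every non-RUN interval becomes (start_minute, duration, code); the code
    is derived from machine data, not picked by an operator. An interval
    still open at the end of the history is not reported yet.
    """
    events = []
    for current, nxt in zip(changes, changes[1:]):
        if current.state == "RUN":
            continue
        duration = nxt.minute - current.minute
        if current.state == "ALARM":
            code = f"FAULT:{current.alarm or 'UNSPECIFIED'}"
        elif duration <= short_stop_max:
            code = "MICRO_STOP"
        else:
            code = "UNPLANNED_STOP"
        events.append((current.minute, duration, code))
    return events


history = [
    StateChange(0, "RUN"),
    StateChange(42, "STOP"),
    StateChange(43, "RUN"),
    StateChange(97, "ALARM", "SPINDLE_OVERLOAD"),
    StateChange(115, "RUN"),
    StateChange(180, "STOP"),
    StateChange(192, "RUN"),
]
for event in classify_downtime(history):
    print(event)
```

Because the 18-minute stop carries the controller's own alarm text, a recurring spindle overload shows up as a fault pattern instead of disappearing under a “short break” entry.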

By automating data capture, you not only eliminate a significant portion of non-value-added manual tasks but also create a pristine, objective data set. This high-quality data is the fuel for accurate bottleneck analysis and effective predictive maintenance, forming the final pillar of a truly data-driven and resilient manufacturing operation.

By adopting this systematic, data-first approach, you can move beyond reactive problem-solving and begin to architect a production environment that is not only more efficient but fundamentally more resilient. The next logical step is to begin auditing your own data infrastructure to identify the most critical information gaps.